A Reevaluation of Assessment Center Construct-Related Validity

- Milton Cahoon

- Mark Bowler

- Jennifer Bowler

Abstract

Recent Monte Carlo research (Lance, Woehr, & Meade, 2007) has questioned the primary analytical tool used to assess the construct-related validity of assessment center post-exercise dimension ratings (PEDRs) – a confirmatory factor analysis of a multitrait-multimethod (MTMM) matrix. By utilizing a hybrid of Monte Carlo data generation and univariate generalizability theory, we examined three primary sources of variance (i.e., persons, dimensions, and exercises) and their interactions in 23 previously published assessment center MTMM matrices. Overall, the person, dimension, and person by dimension effects accounted for a combined 34.06% of variance in assessment center PEDRs (16.83%, 4.02%, and 13.21%, respectively). However, the largest single effect came from the person by exercise interaction (21.83%). Implications and suggestions for future assessment center research and design are discussed.
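The reported shares can be checked with a few lines of arithmetic. The sketch below is illustrative only: it uses the four percentages stated in the abstract, and the dictionary keys and variable names are assumptions introduced here, not the authors' notation. The remainder it computes is simply the share of variance that the abstract does not itemize; how that share divides among the other effects is not stated here.

```python
# Variance percentages reported in the abstract (percent of total PEDR variance).
# Key names are illustrative labels, not the authors' notation.
reported = {
    "person": 16.83,
    "dimension": 4.02,
    "person x dimension": 13.21,
    "person x exercise": 21.83,
}

# Combined share of the three effects the abstract groups together.
combined = round(
    reported["person"] + reported["dimension"] + reported["person x dimension"], 2
)

# Share of variance left over for effects the abstract does not itemize.
not_itemized = round(100 - sum(reported.values()), 2)

print(f"person + dimension + person x dimension: {combined}%")
print(f"person x exercise: {reported['person x exercise']}%")
print(f"not itemized in the abstract: {not_itemized}%")
```

The person by exercise interaction alone exceeds each of the three grouped effects individually, which is the comparison the abstract draws.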

- DOI: 10.5539/ijbm.v7n9p3

Journal Metrics

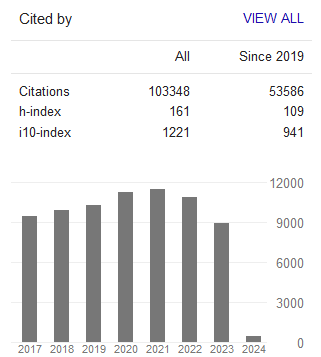

Google-based Impact Factor (2023): 0.86

h-index(2023): 152

i10-index(2023): 1168

Index

- Academic Journals Database

- AIDEA list (Italian Academy of Business Administration)

- ANVUR (Italian National Agency for the Evaluation of Universities and Research Institutes)

- Berkeley Library

- CNKI Scholar

- COPAC

- EBSCOhost

- Electronic Journals Library

- Elektronische Zeitschriftenbibliothek (EZB)

- EuroPub Database

- Excellence in Research for Australia (ERA)

- Genamics JournalSeek

- GETIT@YALE (Yale University Library)

- IBZ Online

- JournalTOCs

- Library and Archives Canada

- LOCKSS

- MIAR

- National Library of Australia

- Norwegian Centre for Research Data (NSD)

- PKP Open Archives Harvester

- Publons

- Qualis/CAPES

- RePEc

- ROAD

- Scilit

- SHERPA/RoMEO

- Standard Periodical Directory

- Universe Digital Library

- UoS Library

- WorldCat

- ZBW-German National Library of Economics

Contact

- Stephen LeeEditorial Assistant

- ijbm@ccsenet.org